What I’m Building With AI

Tools for productivity and agentic AI.

(1) AI As A Productivity Tool

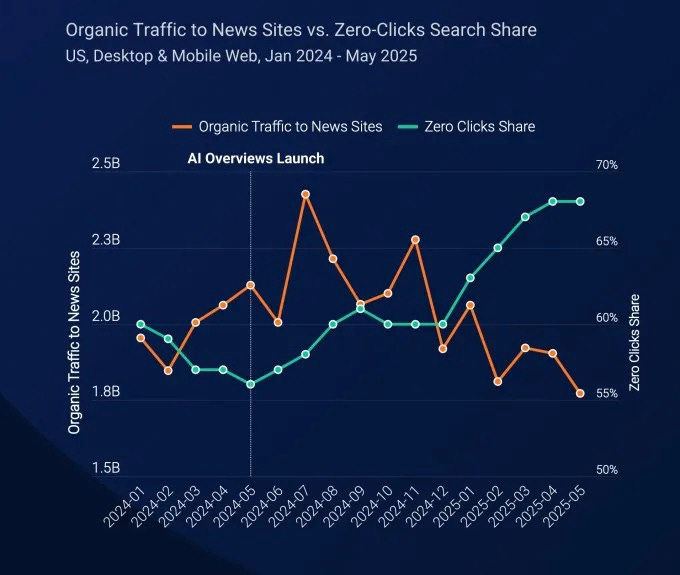

One of the things I’m most excited about with AI is the potential to use it for personal productivity. AI - at least in some charts I’ve seen - is beginning to eat away at Google’s search engine throne. And now I can see one reason why.

Learning the best practices of Agentic AI for pre-sales is a struggle because everything is changing so quickly.

An AI Architect I met at a networking event in downtown Chicago reflected this sentiment. This is what he had to say:

#1 challenge we’re trying to solve is how do we keep up with the pace of change in AI and our product simultaneously. As architects, we typically focus on giving customers “best practice” guidance, but it is more challenging with agentic AI as a pretty new field overall and things will look so different as everything continues to evolve. We are simultaneously helping customers form their overall AI strategies at the same time as educating them on what our company can do.

There’s a laundry list of great newsletters out there that this AI Architect and I could subscribe to.

Last Week in AWS does a great job sending weekly updates about the AWS ecosystem.

Salesforce Ben does a similar thing for the Salesforce ecosystem.

I’m subscribed to Pivot to AI, which sends quality AI-specific content.

PulseMCP is also solid, but it focuses narrowly on the Model Context Protocol (MCP).

These are all great resources. But none of them cover what I, Grant Harris Varner, need: the intersection of emerging Agentic AI use cases from the AI labs (Anthropic, OpenAI, Perplexity) and the best practices of the Salesforce ecosystem.

So I made it… with AI. Here’s how.

Meta Prompting

Prompt Engineering Guide - a fantastic resource on all things prompt engineering - defines Meta Prompting as:

Meta Prompting is an advanced prompting technique that focuses on the structural and syntactical aspects of tasks and problems rather than their specific content details. This goal with meta prompting is to construct a more abstract, structured way of interacting with large language models (LLMs), emphasizing the form and pattern of information over traditional content-centric methods. Source

In layman’s terms, it’s when you ask AI to help you write a prompt. In this case, that was a prompt for a personalized newsletter. So I spent a few chats telling ChatGPT exactly what I wanted, and it gave me this output.

Then I plugged that prompt into a fresh ChatGPT window.
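To make the two-step idea concrete, here is a minimal sketch of the first step: building a prompt that asks the model to *write a prompt* rather than the newsletter itself. This is not the exact text I typed. `NewsletterSpec`, `build_meta_prompt`, and the section names are hypothetical, and the sketch assumes you paste the result into a chat by hand instead of calling any API.

```python
from dataclasses import dataclass, field


@dataclass
class NewsletterSpec:
    """Hypothetical description of what the final newsletter prompt should produce."""
    reader: str
    topics: list[str]
    sources: list[str]
    cadence: str = "weekly"
    sections: list[str] = field(
        default_factory=lambda: ["Top stories", "Why it matters", "Try this"]
    )


def build_meta_prompt(spec: NewsletterSpec) -> str:
    """Build a prompt that asks the model to write a prompt, not the newsletter itself."""
    topics = "\n".join(f"- {t}" for t in spec.topics)
    sources = "\n".join(f"- {s}" for s in spec.sources)
    sections = "\n".join(f"{i}. {s}" for i, s in enumerate(spec.sections, start=1))
    return (
        "You are an expert prompt engineer.\n"
        f"Write a reusable prompt that produces a {spec.cadence} newsletter "
        f"for {spec.reader}.\n\n"
        f"The newsletter must cover:\n{topics}\n\n"
        f"Prefer these sources:\n{sources}\n\n"
        f"Structure every issue as:\n{sections}\n\n"
        "Return only the finished prompt, ready to paste into a fresh chat."
    )


# Step 1: paste this meta-prompt into one chat.
# Step 2: paste the prompt it returns into a fresh chat to generate each issue.
spec = NewsletterSpec(
    reader="a Salesforce pre-sales architect",
    topics=[
        "Emerging agentic AI use cases from Anthropic, OpenAI, and Perplexity",
        "Salesforce ecosystem best practices for agentic AI",
    ],
    sources=["AI lab blogs and release notes", "Salesforce release notes"],
)
meta_prompt = build_meta_prompt(spec)
print(meta_prompt)
```

The point of the design is the split. The first chat only decides the *shape* of the newsletter (audience, topics, structure). The fresh chat then only fills that shape with content, so earlier back-and-forth doesn't clutter it.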

Integrate Gmail

Building this newsletter required connecting my ChatGPT account to Gmail, which was super easy to do. I just opened Settings > Connectors > Gmail. What I didn’t realize is that this also lets ChatGPT search my Gmail inbox for answers. Not sure what time cocktail hour is at my buddy’s wedding in Columbus? Just ask ChatGPT.

What I Learned

This brief experiment taught me a few things. First, it’s easy to build things with AI. And truthfully, that makes me wonder about the future of social media. On one hand, the internet is flooded with bots and AI-generated content, making the dead internet theory feel increasingly real. That worries me as someone who has put a lot of my “5-9pm” energy into writing. On the other hand, part of me thinks that with so much AI slop on the internet, being human carries a premium, and being a top 1% writer will pay dividends. We’ve yet to find out whether that hunch is right.

(2) Enterprise Agentic AI

Unlike personal productivity tools you or I might build with ChatGPT, enterprise Agentic AI use cases are a different beast, especially in regulated industries. There are three key differences.

Scale

When you and I use ChatGPT, we’re typically running one-off chats of varying complexity. At that small scale, burning through a ton of conversations isn’t a massive “uh oh.” By contrast, companies using Salesforce Agentforce, for example, pay by consumption. So if you use an AI agent for a repetitive task that could be handled by Salesforce Flow, Apex, or other deterministic business logic, you could be looking at a massive bill at the end of the year.
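Here is some back-of-the-envelope arithmetic showing how consumption pricing adds up. The $0.10 per action, 2,000 runs a day, and 3 actions per run are made-up placeholders, not actual Agentforce pricing, so check your own contract. The hybrid function also assumes, as a simplification, that the runs handled by Flow or Apex add no consumption charge.

```python
# Hypothetical numbers for illustration only; not actual Agentforce pricing.
PRICE_PER_ACTION = 0.10  # assumed dollars per agent action
DAYS_PER_YEAR = 365


def annual_agent_cost(runs_per_day: float, actions_per_run: int,
                      price_per_action: float = PRICE_PER_ACTION,
                      days: int = DAYS_PER_YEAR) -> float:
    """Yearly consumption bill if every run of a task goes through the agent."""
    return runs_per_day * actions_per_run * price_per_action * days


def hybrid_annual_cost(runs_per_day: float, ambiguous_share: float,
                       actions_per_run: int,
                       price_per_action: float = PRICE_PER_ACTION) -> float:
    """Send only the ambiguous share of runs to the agent.

    Assumes the deterministic remainder (handled by Flow or Apex) adds no
    consumption charge, which is a simplification.
    """
    agent_runs = runs_per_day * ambiguous_share
    return annual_agent_cost(agent_runs, actions_per_run, price_per_action)


all_agent = annual_agent_cost(runs_per_day=2000, actions_per_run=3)
hybrid = hybrid_annual_cost(runs_per_day=2000, ambiguous_share=0.10, actions_per_run=3)
print(f"Agent handles every run: ${all_agent:,.0f}")    # Agent handles every run: $219,000
print(f"Agent handles 10% of runs: ${hybrid:,.0f}")     # Agent handles 10% of runs: $21,900
```

The takeaway is the design choice, not the exact dollar figures. Let deterministic automation take the predictable cases, and save the metered agent for the runs that actually need judgment.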

Trust / Compliance

As individuals, whether we use ChatGPT, Claude, Gemini, or something else, we can now (at least in theory) let these providers store our chats and use them as training data.

But plugging sensitive customer data into an LLM the company doesn’t own is not what an IT leader wants. I actually met an IT leader at a regional bank after church, and he echoed this sentiment. Rather than jumping in with both feet, his team is running small internal pilots with homegrown agents to see where this stuff actually helps.

Data Readiness

A significant pain point in enterprise Agentic AI pilots is rooted in the readiness (or lack thereof) of the company’s data. I can just ask ChatGPT: “Write me a follow-up sales email for Acme Corp. We finalized the terms for the proposal and will find a time next week to get the buying committee on a call to review.” Enterprise prompt builders, by contrast, insert customer data from the CRM directly into the prompt. If your CRM data is bad (and most companies’ is), the agent’s output will be bad too.
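To show why bad CRM data matters, here is a minimal sketch of the Acme Corp email prompt turned into a template that gets filled from a CRM record. A simple readiness check runs before anything reaches the model. The field names, the 90-day staleness cutoff, and the helper functions are all hypothetical. They are not real Salesforce field API names or Prompt Builder behavior, just an illustration of "garbage in, garbage out."

```python
from datetime import date

# Hypothetical prompt template; the {placeholders} are filled from a CRM record.
TEMPLATE = (
    "Write a follow-up sales email to {contact_name} at {account_name}. "
    "We finalized the terms for the proposal (opportunity stage: {stage}) "
    "and want to find a time next week to get the buying committee on a call to review."
)

# Hypothetical field names, not real Salesforce API names.
REQUIRED_FIELDS = ("contact_name", "account_name", "stage", "last_activity")


def readiness_issues(record: dict, today: date, max_age_days: int = 90) -> list[str]:
    """List the data problems that would leak straight into the prompt."""
    issues = [f"missing {name}" for name in REQUIRED_FIELDS if not record.get(name)]
    last_activity = record.get("last_activity")
    if last_activity is not None:
        age = (today - last_activity).days
        if age > max_age_days:
            issues.append(f"stale: last activity {age} days ago")
    return issues


def ground_prompt(record: dict, today: date) -> str:
    """Fill the template only if the record passes the readiness check."""
    issues = readiness_issues(record, today)
    if issues:
        raise ValueError("CRM record not ready: " + "; ".join(issues))
    return TEMPLATE.format(**record)  # extra keys such as last_activity are ignored


today = date(2025, 2, 1)
clean = {"contact_name": "Jane Doe", "account_name": "Acme Corp",
         "stage": "Negotiation", "last_activity": date(2025, 1, 10)}
messy = {"contact_name": "", "account_name": "Acme Corp",
         "stage": "Negotiation", "last_activity": date(2024, 6, 1)}

print(ground_prompt(clean, today))
print(readiness_issues(messy, today))  # ['missing contact_name', 'stale: last activity 245 days ago']
```

Failing loudly on the messy record is deliberate. The alternative is an email addressed to nobody about a deal that went cold months ago, and no amount of prompt engineering fixes that.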

The Irony of Being an AI-Obsessed Writer

There’s a touch of irony that comes with building a personal brand around AI, while simultaneously growing as a writer. Writers I’ve met online have unanimously talked about the benefits of creating without LLMs. And I agree.

Without LLMs, your brain has to make the connections between ideas. Rather than outsourcing that work to NotebookLM, ChatGPT, or Claude, you do it yourself. It’s sort of like squatting 700 lbs in a squat suit. Sure, you’re squatting 700, but are you really?

You might be able to produce human-like essays with ChatGPT. Trust me, I tried with this little content experiment at my last startup gig.

Re-reading those essays now, I realize that:

AI-generated writing, even when I’m the one prompting it, isn’t as good as what I’ve written straight from my own brain.

Writing with AI causes me to lose out on the neural connections that come with real human writing.

As Jack Raines pointed out, OpenAI is paying nearly $400k per year for a human writer. There must be something to real humans actually writing rather than outsourcing it to AI.

In Closing

Several months ago, this article was going viral here on Substack. The community’s collective fear was that AI is making us dumber. And yet that fear, while not completely unfounded, might be hyperbole.

In 2008, the front cover of The Atlantic asked, “Is Google Making Us Stupid?”

When new tech comes out, it makes us more efficient, letting us accomplish more with less and focus on more strategic tasks. Do you feel any more stupid since you started using Google?

— Grant Varner

Quick Note From Me

Last month I was let go from my job. Let’s test the power of the internet, and all 73 of you subscribers. If you know anyone in tech in the Chicago area, could you make an introduction? Muchas gracias. 🙂